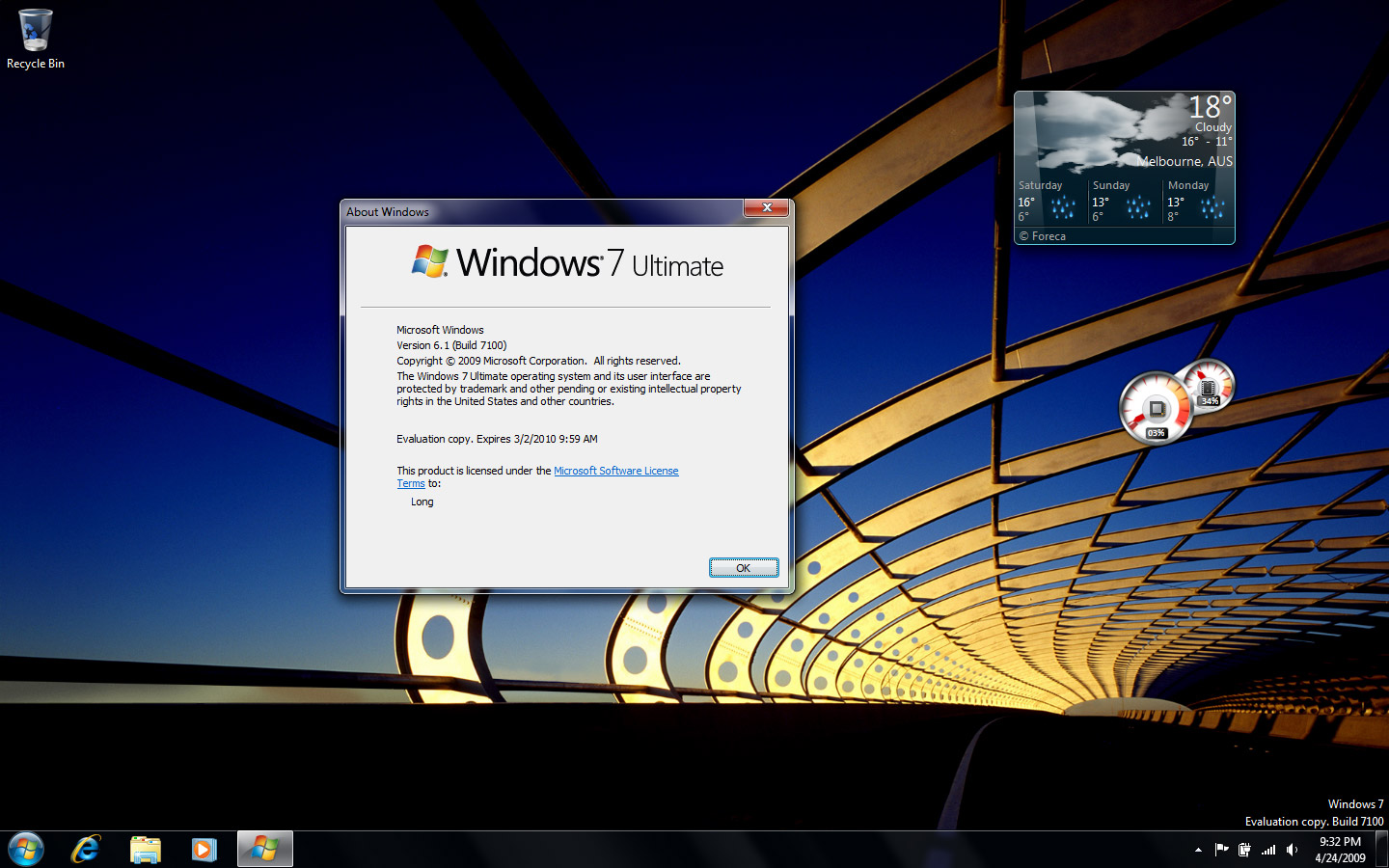

Windows "Vienna" (formerly known as "Blackcomb") is Microsoft's codename for a future version of Microsoft Windows. It was originally announced in February 2000 but has since been subject to major delays and rescheduling.

The codename "Blackcomb" was originally assigned to a version of Windows that was planned to follow Windows XP (codenamed "Whistler"; both are named after the Whistler-Blackcomb resort) in both client and server versions. However, in August 2001, the release of Blackcomb was pushed back several years, and Vista (originally codenamed "Longhorn" after a bar in the Whistler-Blackcomb resort) was announced as a release between XP and Blackcomb.

Since then, the status of Blackcomb has undergone many alterations and PR manipulations, ranging from Blackcomb being scrapped entirely to becoming a server-only release. As of 2006, it is still planned as both a client and a server release, with a current release estimate of anywhere between 2009 and 2012, although no firm release date or target has yet been publicized.


In January 2006, "Blackcomb" was renamed to "Vienna".

Originally, internal sources pitched Blackcomb as not just a major revision of Windows, but a complete departure from the way users today typically think about interacting with a computer. While Windows Vista is intended to be a technology-focused release with some added UI sparkle (in the form of the Windows Aero set of technologies and guidelines), Vienna is targeted directly at revolutionizing the way users interact with their PCs.

For instance, the "Start" philosophy introduced in Windows 95 may be completely replaced by the "new interface", which was said in 1999 to be scheduled for "Vienna" (before being moved to Vista ("Longhorn") and then back again to "Vienna").

The Explorer shell will be replaced in its entirety, with features such as the taskbar giving way to a new concept based on the last 10 years of R&D at the Microsoft "VIBE" research lab. Projects such as GroupBar and LayoutBar are expected to make an appearance, allowing users to manage and keep track of their applications and documents more effectively while in use. A new way of launching applications is also expected: among other ideas, Microsoft is investigating a pie-menu-type circular interface, similar in function to the dock in Mac OS X.

Several other features originally planned for Windows Vista may be part of "Vienna", though they may be released independently when they are finished.

"Vienna" will also feature the "sandboxed" approach discussed during the Alpha/White Box development phase for Longhorn. All non-managed code will run in a sandboxed environment where access to the "outside world" is restricted by the operating system. Access to raw sockets will be disabled from within the sandbox, as will direct access to the file system, the hardware abstraction layer (HAL), and complete memory addressing. All access to outside applications, files, and protocols will be regulated by the operating system, and any malicious activity will be halted immediately. If this approach is successful, it bodes very well for security and safety, as it is virtually impossible for a malicious application to cause any damage to the system if it is locked in what is effectively a glass box.
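To make the idea concrete, here is a toy sketch in Python of how such a gatekeeper could behave. It is only an illustration of the concept described above, not Microsoft's design: the class name `SandboxBroker`, the capability strings, and the audit log are all hypothetical and were made up for this example.

```python
from dataclasses import dataclass, field

# Hypothetical capability names, based on the restrictions listed in the text:
# raw sockets, direct file system access, the HAL, and full memory addressing.
DENIED_IN_SANDBOX = frozenset(
    {"raw_socket", "direct_filesystem", "hal", "full_memory_addressing"}
)


@dataclass
class SandboxBroker:
    """Toy stand-in for the OS component that regulates sandboxed code."""

    audit_log: list = field(default_factory=list)

    def request(self, capability: str) -> bool:
        """Return True if sandboxed code may use the capability."""
        allowed = capability not in DENIED_IN_SANDBOX
        # Every decision is recorded, mirroring the idea that all outside
        # access is regulated by the operating system.
        self.audit_log.append((capability, allowed))
        return allowed


if __name__ == "__main__":
    broker = SandboxBroker()
    for cap in ("raw_socket", "brokered_file_read", "hal"):
        verdict = "allowed" if broker.request(cap) else "blocked"
        print(f"{cap}: {verdict}")
```

In this sketch anything not on the deny list is treated as a brokered request and allowed; a real system would more likely work from an explicit allow list.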

Another interesting feature mentioned by Bill Gates is "a pervasive typing line that will recognize the sentence that [the user is] typing in." The implications of this could be as simple as a "complete as you type" function, as found in most modern search engines (e.g. Google Suggest), or as complex as being able to give verbal commands to the PC without any concern for syntax. The former feature has been incorporated to an extent in Windows Vista.
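The simpler end of that range, "complete as you type", can be sketched in a few lines of Python. This is a minimal illustration using prefix matching over a sorted list, not a description of how Vista or Google Suggest actually work; the function name `complete` and the sample phrases are invented for the example.

```python
import bisect


def complete(prefix, phrases, limit=5):
    """Return up to `limit` phrases that start with `prefix` (case-insensitive)."""
    key = prefix.lower()
    ordered = sorted(p.lower() for p in phrases)
    # In a sorted list, all entries sharing a prefix sit next to each other,
    # so binary search finds where the run of matches begins.
    start = bisect.bisect_left(ordered, key)
    matches = []
    for phrase in ordered[start:]:
        if len(matches) >= limit or not phrase.startswith(key):
            break
        matches.append(phrase)
    return matches


if __name__ == "__main__":
    known = ["Open Control Panel", "open notepad", "print document"]
    print(complete("op", known))
```

A production version would keep the list sorted once instead of sorting on every keystroke, and would probably rank suggestions by how often they are used.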

Microsoft has stated that the client version of "Vienna" will be available in both 32-bit and 64-bit editions, in order to ease the industry's transition from 32-bit to 64-bit computing. Vienna Server is expected to support only 64-bit server systems. There will be continued backward compatibility with 32-bit applications, but 16-bit Windows and MS-DOS applications will not be supported, just as they are not supported in 64-bit versions of Windows Vista. They are already unsupported in 64-bit versions of XP and Server 2003.

If you have any doubts, please post a comment so that I can learn more.

I think this information should help you see how "Vienna" differs from the other Windows operating systems.

![[Capture.JPG]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiYTndAYiM9lG8KObf8ZpZ-YQpeYXXWYdroSFFTtcfmIC3OR1ZU0Na6-a2EXh7XAiXvogHKTN2A3VZiCeY_5oAldaNeWqE0qTdkteCDhzc86PEPGXvhc98zeCEQHacOASKWXhRz-fH3uvns/s1600/Capture.JPG)